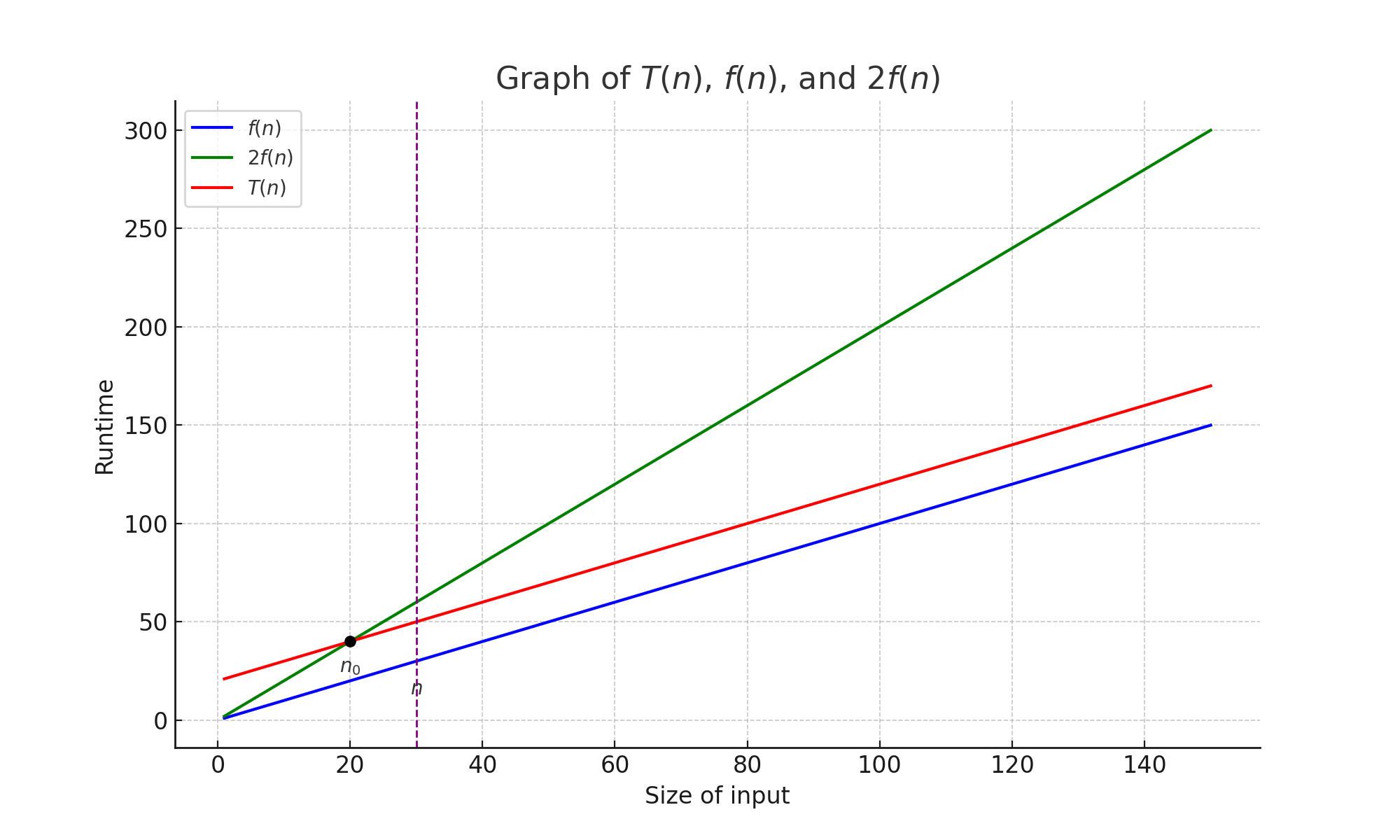

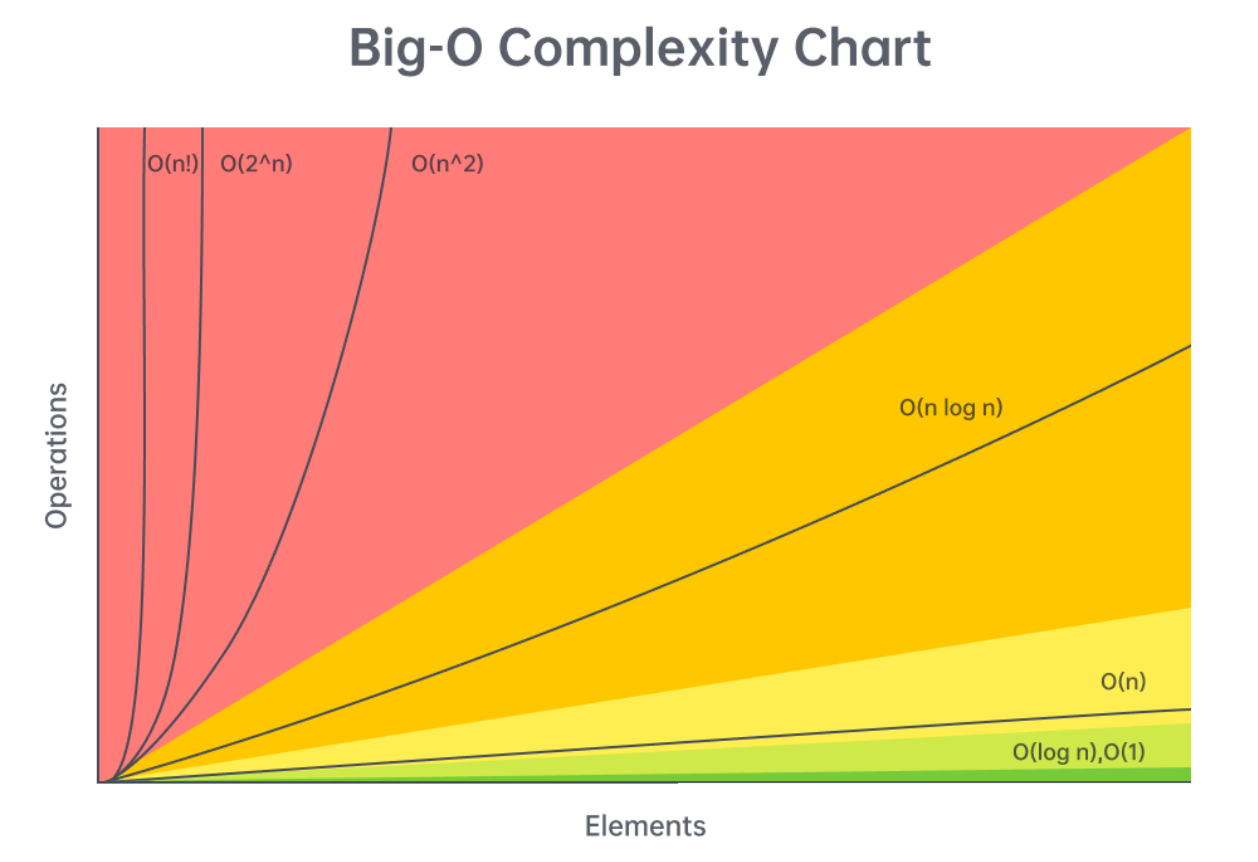

Big-O notation describes an asymptotic upper bound on an algorithm's running time or space usage, expressing how its cost grows as the input size increases.

Definition

$$ f(n) = O(g(n)) \text{ if there exist constants } c > 0 \text{ and } n_0 > 0 \text{ such that } 0 \leq f(n) \leq c \cdot g(n) \text{ for all } n \geq n_0. $$
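As an illustration of the definition, take the hypothetical function $f(n) = 3n^2 + 5n + 2$ and $g(n) = n^2$. The witnesses $c = 4$ and $n_0 = 6$ work, because $3n^2 + 5n + 2 \leq 4n^2$ is equivalent to $n^2 - 5n - 2 \geq 0$, whose larger root is $(5 + \sqrt{33})/2 \approx 5.37$. The minimal Python sketch below only spot-checks the inequality over a finite range, so it can refute a bad choice of $c$ and $n_0$ but cannot prove the bound for all $n$.

```python
def f(n: int) -> int:
    """Hypothetical running-time function used only for illustration."""
    return 3 * n * n + 5 * n + 2


def g(n: int) -> int:
    """Candidate bounding function g(n) = n^2."""
    return n * n


def satisfies_big_o(c: int, n0: int, limit: int = 10_000) -> bool:
    """Spot-check 0 <= f(n) <= c * g(n) for every n with n0 <= n < limit.

    A finite check can only refute a choice of witnesses (c, n0);
    the algebra in the text is what proves the bound for all n >= n0.
    """
    return all(0 <= f(n) <= c * g(n) for n in range(n0, limit))


if __name__ == "__main__":
    print(satisfies_big_o(c=4, n0=6))  # True
    print(satisfies_big_o(c=4, n0=5))  # False: f(5) = 102 > 4 * 25 = 100
    print(satisfies_big_o(c=3, n0=1))  # False: 3n^2 + 5n + 2 > 3n^2 for every n
```

Note that the witnesses are not unique: any larger $c$ or $n_0$ also satisfies the definition.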

Characteristics

Because Big-O describes only an upper bound on the growth rate and hides constant factors, it is essential to consider those constants when comparing algorithms on realistic input sizes.

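Consider two running-time functions:

$$ T(n) = n \log n $$

$$ U(n) = 50 n $$

Big-O ranks $U(n) = O(n)$ below $T(n) = O(n \log n)$, yet $U(n) < T(n)$ only when $\log n > 50$. With base-2 logarithms that means $n > 2^{50} \approx 1.1 \times 10^{15}$, so for any realistic input size the $O(n \log n)$ function is the cheaper of the two.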
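A quick numeric comparison makes the effect of the constant 50 visible. This is a minimal Python sketch; it assumes base-2 logarithms, since the notes do not fix the base, and the helper names `t_cost`, `u_cost`, and `cheaper` are hypothetical.

```python
import math


def t_cost(n: int) -> float:
    """T(n) = n log2 n (base 2 is an assumption; the notes leave it open)."""
    return n * math.log2(n)


def u_cost(n: int) -> int:
    """U(n) = 50 n: linear growth with a large constant factor."""
    return 50 * n


def cheaper(n: int) -> str:
    """Return which cost function is smaller at n ('T' on ties)."""
    return "U" if u_cost(n) < t_cost(n) else "T"


# Exact crossover: 50 n < n log2 n  <=>  log2 n > 50  <=>  n > 2**50.
CROSSOVER = 2 ** 50

if __name__ == "__main__":
    for n in (10, 1_000, 1_000_000, 10 ** 9, 10 ** 12, 10 ** 16):
        print(f"n = {n:>22,}  T = {t_cost(n):.3e}  U = {u_cost(n):.3e}  cheaper: {cheaper(n)}")
    print(f"U is cheaper only for n > 2**50 = {CROSSOVER:,}")
```

The crossover is computed symbolically rather than by a floating-point search, because near $n = 2^{50}$ the value of `math.log2(n)` can round to exactly 50.0 and blur the comparison.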

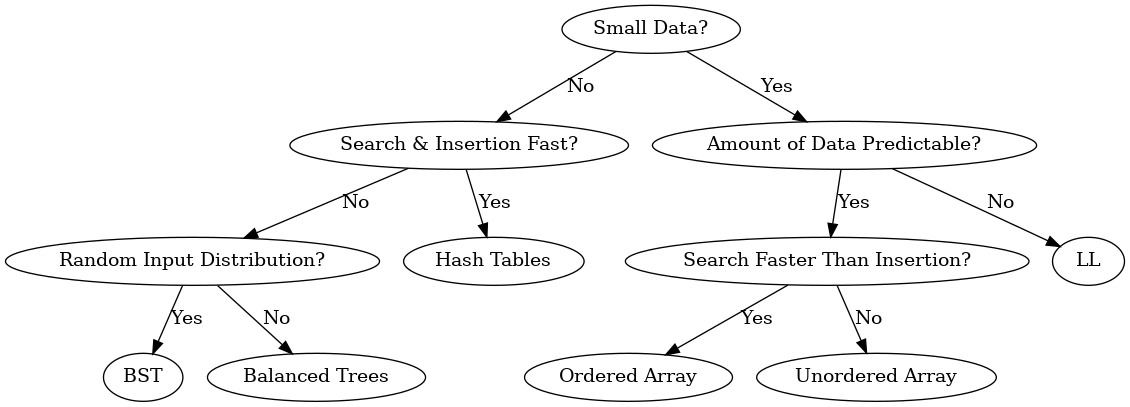

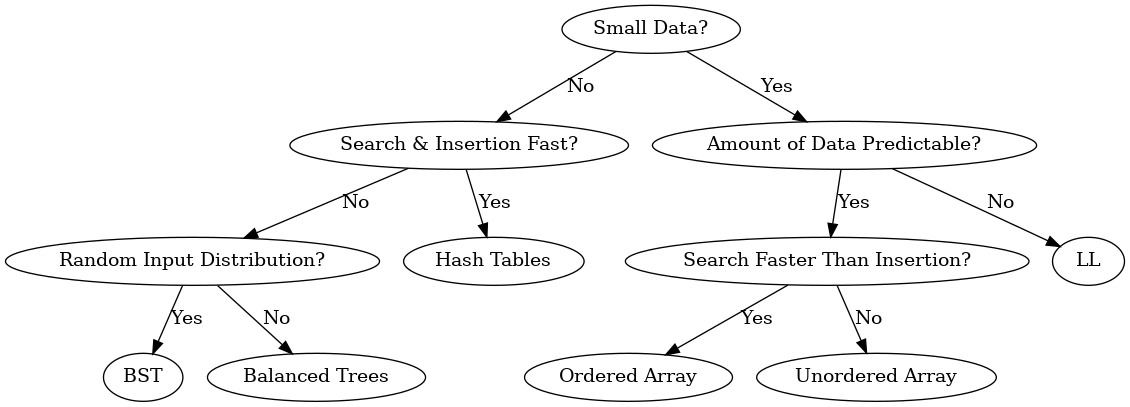

General-Purpose Data Structures

Special-Purpose Data Structures: Abstract Data Types (ADTs)

Sorting and Searching

Graphs